Isaac 0.1: The First Perceptive-Language Model on fal

While AI has transformed digital interactions, the physical world, with its dynamic environments and real-time demands, has remained out of reach. That’s why we’re excited to announce our partnership with Perceptron.

Founded by the team behind Meta’s Chameleon multimodal models, Perceptron introduces breakthrough capabilities in physical-world understanding: AI that is more capable, efficient, and adaptable to the realities of the world around us.

Perceptive models, built for real-world understanding

The first in the Perceptron family, Isaac 0.1 delivers breakthrough capabilities in grounding, spatial reasoning, and in-context visual learning. At just 2B parameters, it outperforms models 50× larger, making real-time and edge deployments practical for developers.

Key capabilities

- Visual QA → Outperforms all models on perceptive benchmarks while using simpler training recipes

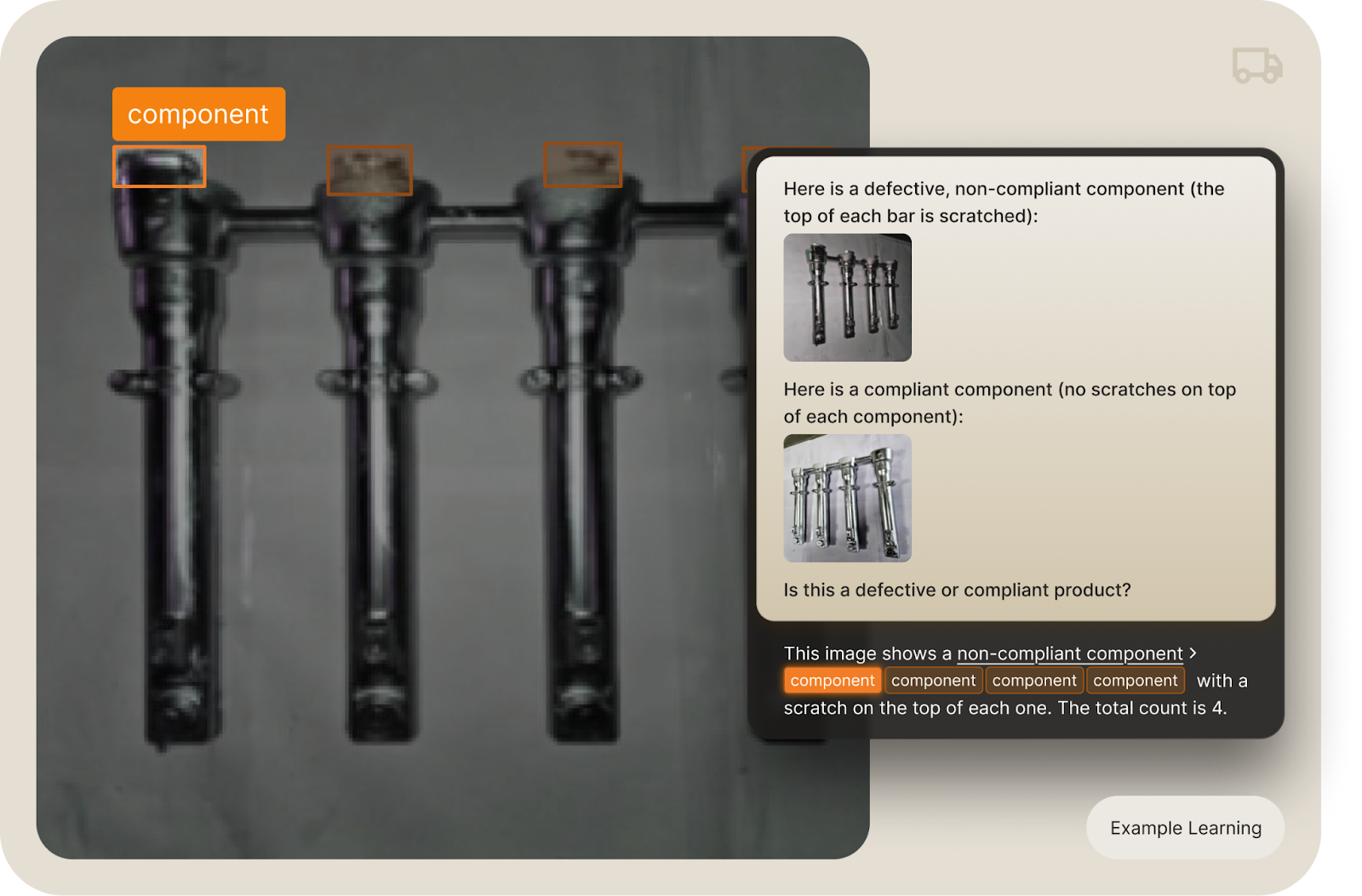

- In-context learning → Learns new visual concepts from just a few annotated examples, removing the need for traditional detection stacks like YOLO

- Grounding accuracy → Superior pointing and localization, critical for real-world applications

- Efficiency, edge, and latency → At 2B parameters, Isaac 0.1 outperforms significantly larger models (100B+ parameters) on key perceptive benchmarks

Designed for real-world applications

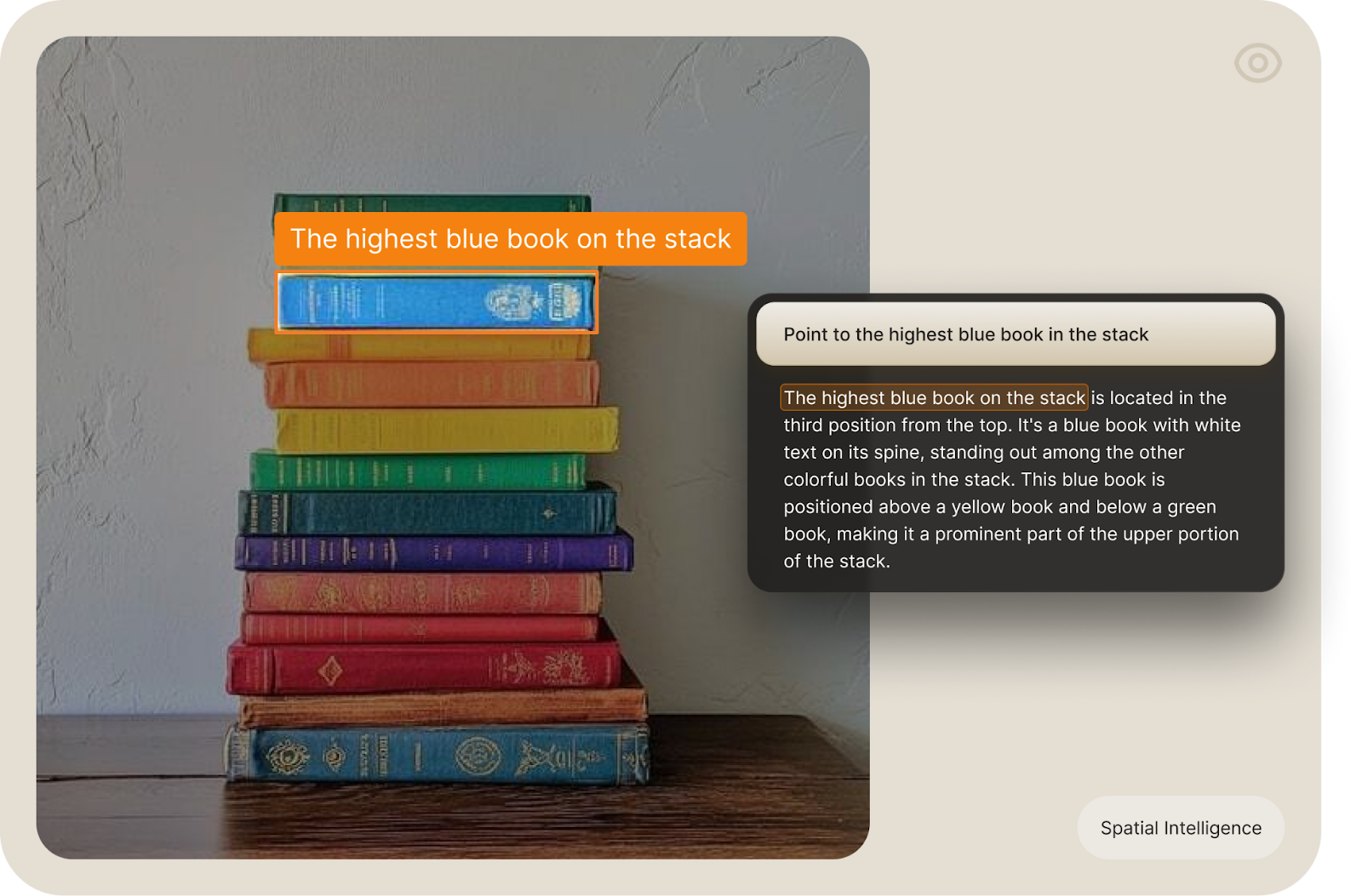

Best-in-class spatial intelligence

Isaac 0.1 can point to exact objects, components, or details in an image, answering questions like “what’s broken here?” or following instructions like “point to the highest blue book.” It understands occlusions, spatial relationships, and object interactions, with video reasoning coming soon.

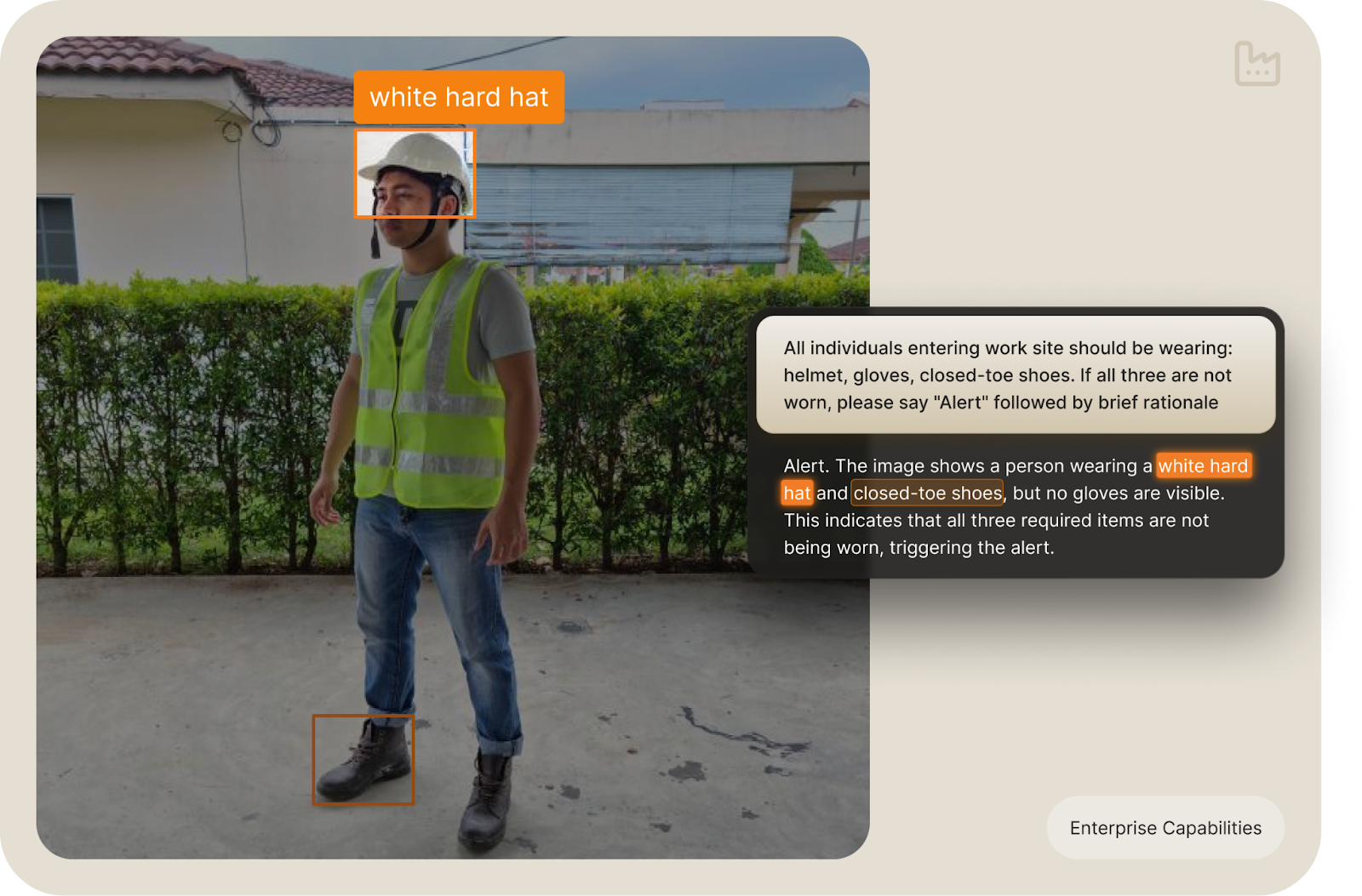

Enterprise-grade capabilities

The model detects missing PPE, unsafe equipment use, or subtle defects in manufacturing lines, highlighting not just what’s wrong but where. It also supports security use cases like spotting anomalies, unauthorized access, or abandoned objects in real time.

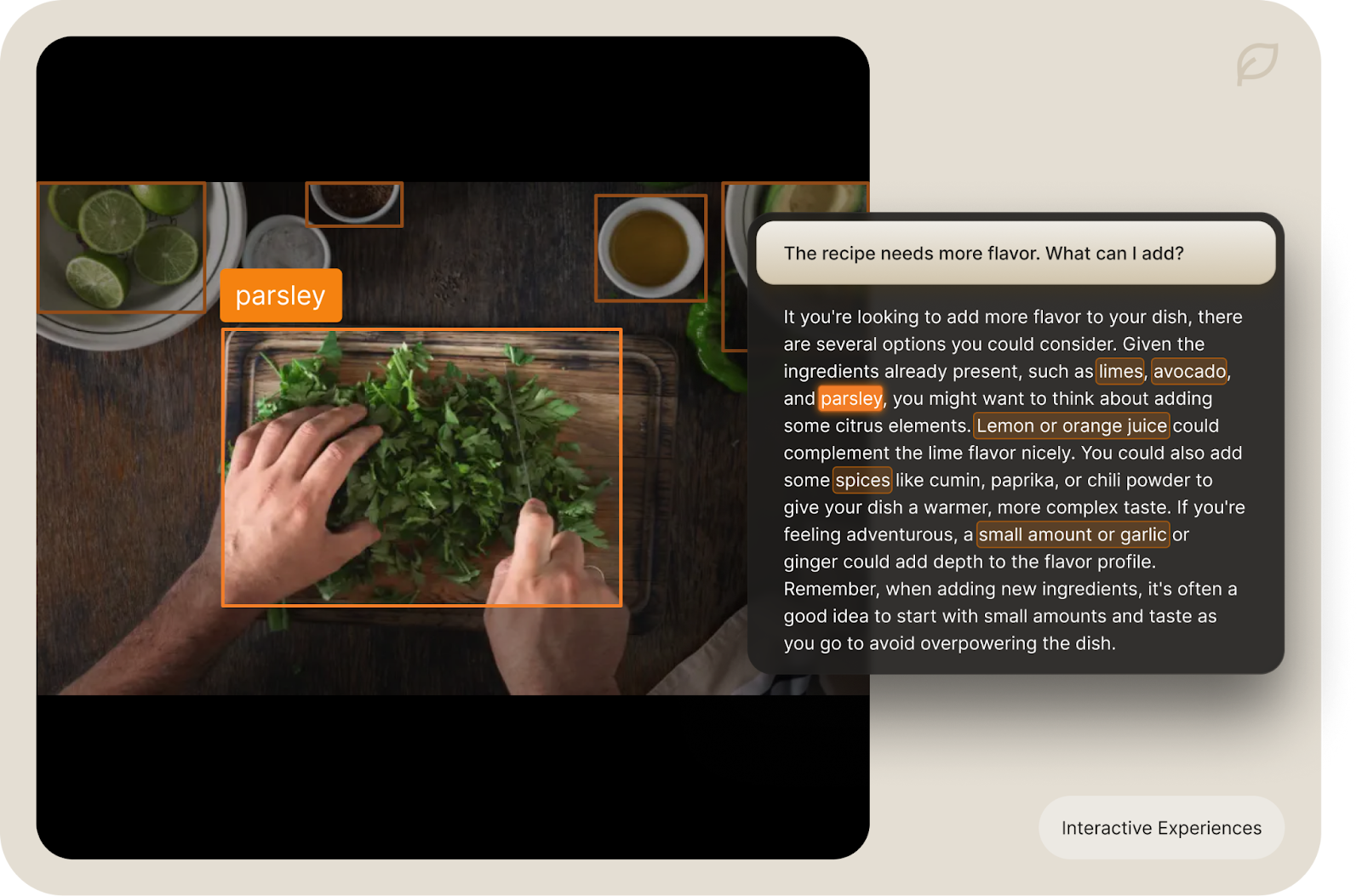

Interactive experiences

Developers can build real-time apps that give repair guidance, improve athletic form, or power AR-style cooking assistants that identify ingredients and suggest substitutions.

In-context learning

Provide a few annotated examples, and the model adapts immediately, learning your defect standards or safety conditions without retraining or fine-tuning.

How to try it

- Go to: Isaac 0.1

- Upload any image(s)

- Write a question (e.g., “Where are the cars?”) or command (e.g., “Identify any cars”) to direct the model’s output

- Try more complex queries like:

  - What’s the meaning behind this scene?

  - Which objects moved between these two images?

  - Does this manufactured part have the described defect? (Be sure to provide a visual representation of the defect you wish to detect.)
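The same steps (pick an image, write a question or command, send it to the model) can also be scripted. The block below is a minimal sketch, not the official integration: it assumes the `fal-client` Python package and a `FAL_KEY` environment variable, and the endpoint id placeholder and the `prompt` / `image_url` field names are hypothetical. Check the model's API page on fal for the real endpoint id and input schema before using it.

```python
from typing import Any

# Placeholder, NOT a real endpoint id: copy the id shown on the model's fal page.
ISAAC_ENDPOINT = "<isaac-endpoint-id>"


def build_arguments(prompt: str, image_url: str) -> dict[str, Any]:
    """Assemble a request payload for one question about one image.

    The field names "prompt" and "image_url" are assumptions made for this
    sketch; the model's actual input schema may differ.
    """
    cleaned = prompt.strip()
    if not cleaned:
        raise ValueError("prompt must not be empty")
    if not image_url.startswith(("http://", "https://", "data:")):
        raise ValueError("image_url must be an http(s) URL or a data URI")
    return {"prompt": cleaned, "image_url": image_url}


def ask_isaac(prompt: str, image_url: str) -> Any:
    """Send the request through fal's Python client and wait for the result.

    Requires `pip install fal-client` and FAL_KEY set in the environment.
    The shape of the returned result is not specified here.
    """
    import fal_client  # imported lazily so build_arguments works without it

    return fal_client.subscribe(
        ISAAC_ENDPOINT,
        arguments=build_arguments(prompt, image_url),
    )
```

Calling `ask_isaac("Where are the cars?", "https://example.com/street.jpg")` would submit the first example query above; the image URL here is only illustrative.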

Start creating today

Isaac 0.1 is now live on fal. Explore it in the playground, experiment with your own images and questions, or bring it into your apps and workflows.

For the first time, you can build directly with a perceptive-language model that understands and reasons about the physical world.

Try Isaac 0.1 on fal or test it on Perceptron’s demo page now.