The Unbundling of Airflow

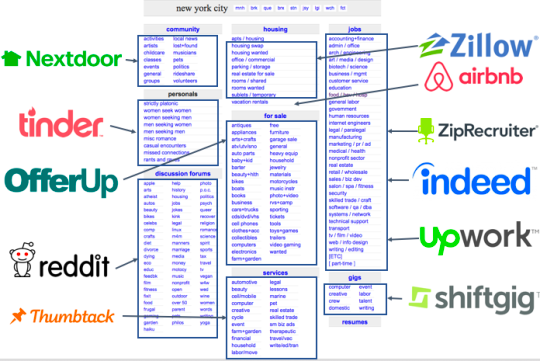

In each subdomain of software, we have seen the same story play out over and over again. Products start small, add adjacent verticals and functionality over time, and end up becoming platforms. Once these platforms become big enough, people figure out how to better serve the neglected verticals, or how to abstract functionality out into purpose-built chunks, and the unbundling starts. The classic example is the unbundling of Craigslist. You have probably seen a version of the Craigslist unbundling diagram somewhere on the internet.

In the data world, it's hard to say what makes a platform, but luckily some tools advertise themselves as such. Airflow is one of them:

> Airflow is a platform created by the community to programmatically author, schedule and monitor workflows.

One might consider Airflow just a workflow manager, but its flexibility allowed it to take on many additional responsibilities. Heavy users of Airflow can do a vast variety of data-related tasks without leaving the platform: extract and load scripts, report generation, transformations in Python and SQL, and syncing data back to BI tools.

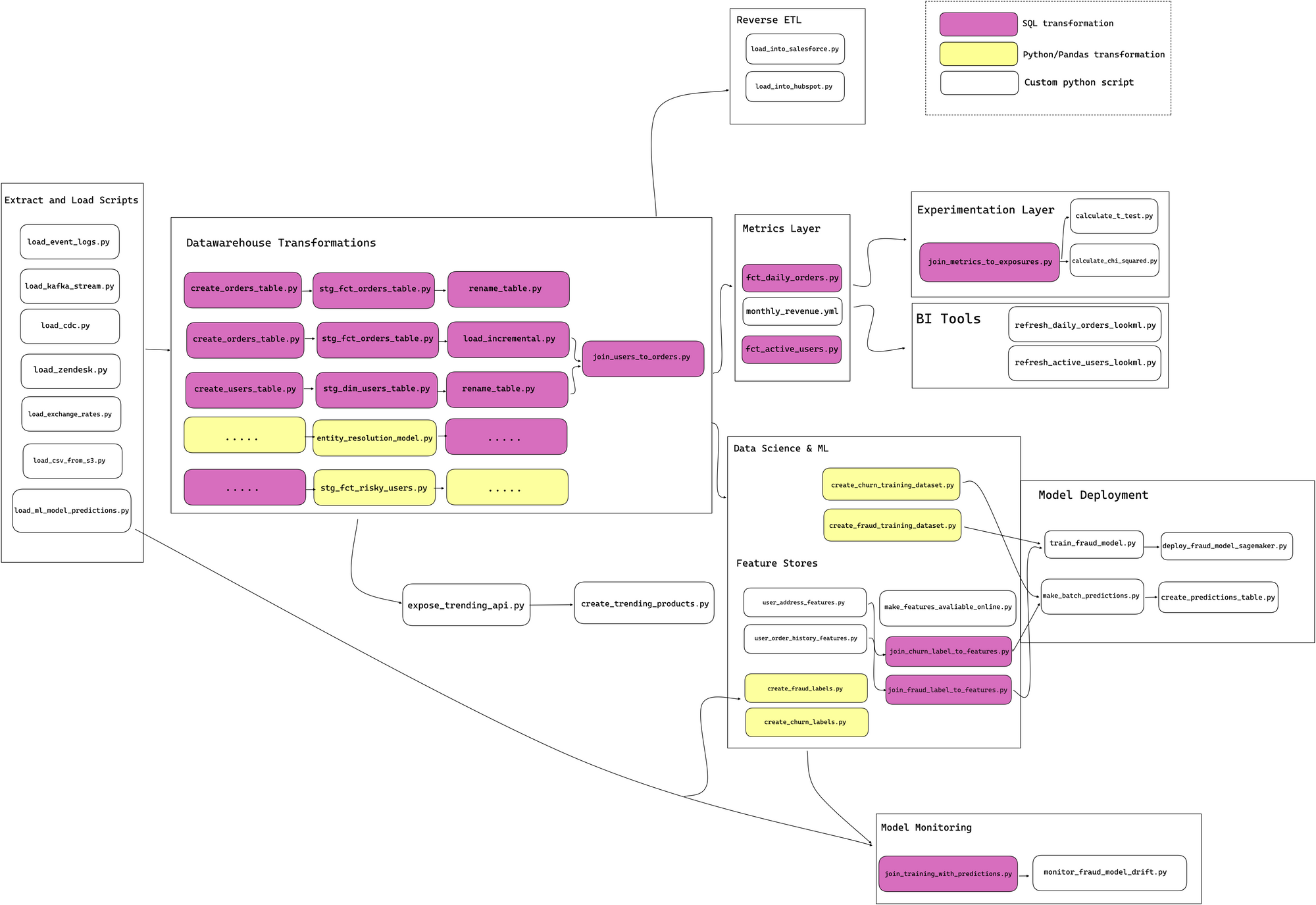

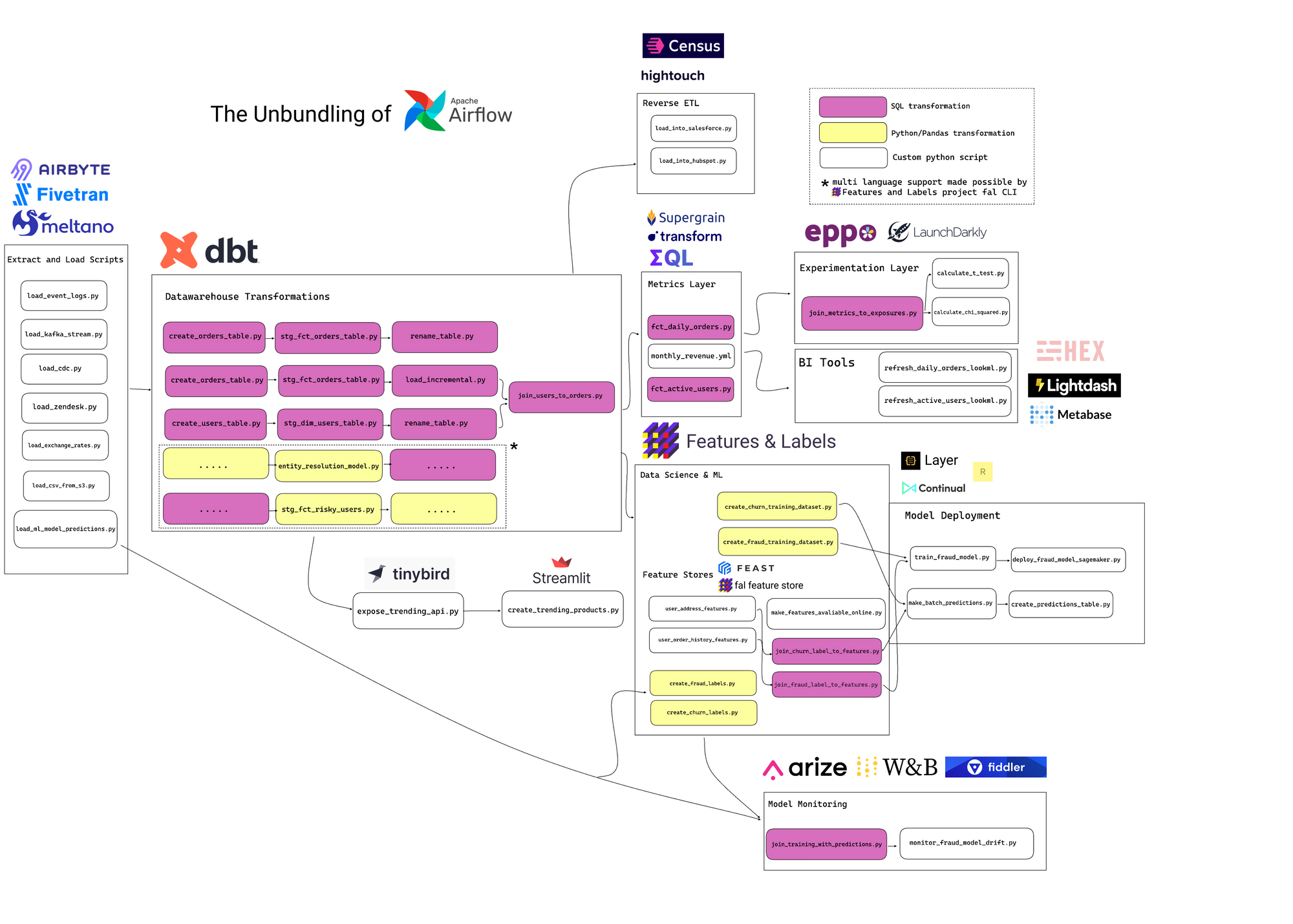

Before the fragmentation of the data stack, it wasn't uncommon to build end-to-end pipelines with Airflow. Organizations used to build almost their entire data workflows as custom scripts developed by in-house data engineers. Bigger companies even built their own frameworks inside Airflow, for example frameworks with dbt-like functionality for SQL transformations, to make it easier for data analysts to write these pipelines.

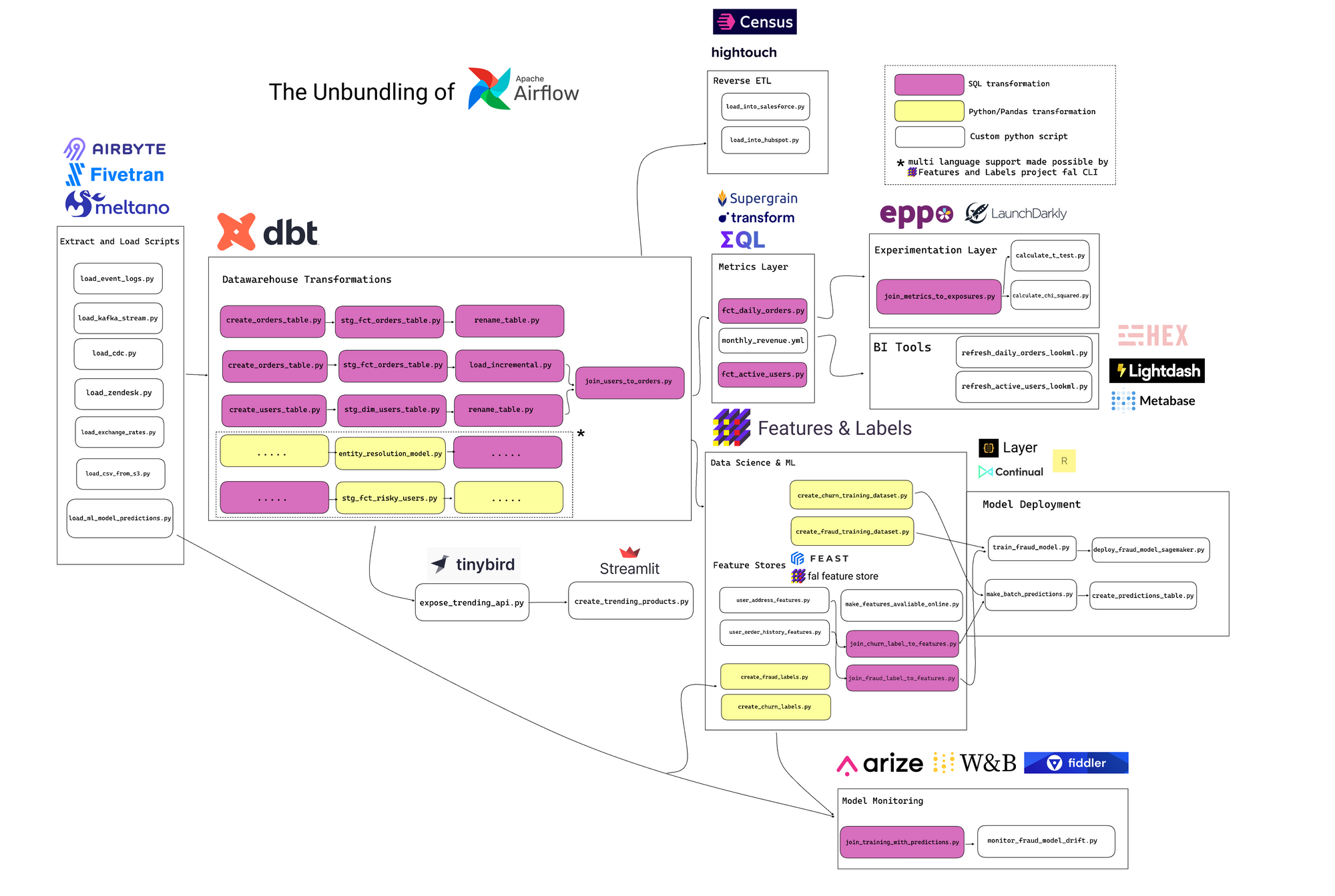

Today, data practitioners have many tools under their belt, and only rarely do they have to reach for a tool like Airflow. Fivetran and Airbyte took care of the extract and load scripts that one might write with Airflow. dbt came for the data transformations, and Census and Hightouch for Reverse ETL. Metrics and experimentation layers are also getting their own focused tooling: metrics with tools like Transform, Metriql and Supergrain, and experimentation with Eppo. Certain companies relied on Airflow for data science and ML workloads, but with the rising popularity of MLOps, that layer is also being abstracted out. Open source tools like Feast are unbundling best practices for feature management that used to live in standalone Python scripts.

If the unbundling of Airflow means all the heavy lifting is done by separate tools, what is left behind?

The remaining gap is that we still don't have a good solution for work that needs to happen in languages other than SQL. For example, adding pandas data transformations to a dbt DAG is still a non-trivial task. Another example is the entity resolution scenario that Tristan from dbt Labs points out. It is also very common to have Python nodes in a DAG that are not transformations at all. Such a node might score risky users with an ML model and write the predictions to a table that downstream SQL transformations then process. We are working on this problem at Features and Labels by adding bilingual (SQL and Python) support to dbt.
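To make the idea of a mixed-language DAG concrete, here is a minimal sketch using only Python's standard library (`sqlite3` and `graphlib`, which requires Python 3.9+). It is not how dbt or Features and Labels implement this; the node names, the `run_dag` helper and the failed-payments rule standing in for an ML model are all hypothetical. SQL nodes are materialized as tables, and a Python node in the middle writes predictions that a downstream SQL node consumes.

```python
import sqlite3
from graphlib import TopologicalSorter


def score_risky_users(conn: sqlite3.Connection) -> None:
    # Hypothetical Python node. A hand-written rule (3+ failed payments)
    # stands in for an ML model; a real pipeline would load a trained model.
    rows = conn.execute("SELECT user_id, failed_payments FROM stg_users").fetchall()
    conn.execute("DROP TABLE IF EXISTS risk_predictions")
    conn.execute("CREATE TABLE risk_predictions (user_id INTEGER, is_risky INTEGER)")
    conn.executemany(
        "INSERT INTO risk_predictions VALUES (?, ?)",
        [(uid, int(failed >= 3)) for uid, failed in rows],
    )


# Hypothetical DAG: node name -> (kind, body, upstream dependencies).
NODES = {
    "stg_users": ("sql", "SELECT id AS user_id, failed_payments FROM raw_users", set()),
    "risk_predictions": ("python", score_risky_users, {"stg_users"}),
    "risky_user_report": (
        "sql",
        "SELECT COUNT(*) AS risky_users FROM risk_predictions WHERE is_risky = 1",
        {"risk_predictions"},
    ),
}


def run_dag(conn: sqlite3.Connection, nodes: dict) -> list:
    # TopologicalSorter takes a mapping of node -> predecessors and yields
    # every node after all of its upstream dependencies.
    graph = {name: deps for name, (_, _, deps) in nodes.items()}
    order = list(TopologicalSorter(graph).static_order())
    for name in order:
        kind, body, _ = nodes[name]
        if kind == "sql":
            # Materialize a SQL node as a table named after the node.
            conn.execute(f"DROP TABLE IF EXISTS {name}")
            conn.execute(f"CREATE TABLE {name} AS {body}")
        else:
            # A Python node receives the connection and writes its own table.
            body(conn)
    return order


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE raw_users (id INTEGER, failed_payments INTEGER)")
    conn.executemany("INSERT INTO raw_users VALUES (?, ?)", [(1, 0), (2, 4), (3, 3)])
    run_dag(conn, NODES)
    print(conn.execute("SELECT risky_users FROM risky_user_report").fetchone())  # prints (2,)
```

The point of the sketch is that the Python node is a first-class member of the same dependency graph as the SQL nodes, so ordering and re-runs are handled in one place rather than by an external orchestrator wrapping the SQL tool.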

Another big responsibility is scheduling, but this is a relatively simple task. EL is usually the starting point of a data pipeline, so I can see EL tools (or even dbt Cloud) taking this on without Airflow. One might object that people really like the Airflow UI: it provides a holistic view of the whole system, making it easy to discover what has failed and re-run jobs when necessary. This is a valid point, but the holistic view has diminishing returns as more of the complexity moves inside other tools. For example, if an Airflow workflow invokes a complicated dbt Cloud job, the structure of the dbt DAG is not observable from the Airflow UI.

Then there are tools trying to become a better Airflow by making tasks easier to deploy, or by being more scalable, containerized or asset-driven. It's hard to make predictions in the ever-evolving data landscape, but I am not sure we need a better Airflow. Building a better Airflow feels like optimizing the writing of code that shouldn't have been written in the first place.

A diverse set of tools is unbundling Airflow, and this diversity is causing substantial fragmentation in the modern data stack. Like everyone else, I predict some consolidation of these tools in the coming years. I believe dbt Cloud is the best positioned for this consolidation to happen. More on this in the next post!

Special thanks to Rajko Radovanović (@rajko_rad) for giving valuable feedback on this post.
