FLUX.2 Is Now Available on fal

We’re pleased to announce the release of FLUX.2 , the newest state-of-the-art image generation and editing model, now available on fal on day 0. FLUX.2 pushes visual quality, realism, and controllability forward in a major way.

FLUX.2 Pro comes in two powerful models:

- Text-to-Image: https://fal.ai/models/fal-ai/flux-2-pro/edit

- Image Editing: https://fal.ai/models/fal-ai/flux-2-pro/

Together, these models set a new standard for image generation and editing. With FLUX.2 you can expect higher resolutions (up to 4MP), and new capabilities like multi image referencing, precise color control using HEX codes and JSON prompting.

Key Model Details

FLUX.2 Pro is the high-fidelity, production-ready version of the model. It delivers the strongest image quality and most reliable prompt adherence, ideal for professional workflows, campaigns, and any scenario where consistency and realism matter.

FLUX.2 Flex is the customizable version of the model built for creators who want full control over generation behavior. It allows you to adjust steps, guidance, and other settings, making it ideal for workflows that require fine-tuned precision rather than fixed defaults. Flex also delivers noticeably stronger typography and text rendering, and supports up to 10 reference images,with a total input capacity of up to 14 MP, giving you more flexibility when working with complex compositions or multi-image prompts.

FLUX.2 Dev is the open-weights version designed for experimentation and customization. It’s lighter, easier to run locally, and fully supports LoRA training, making it perfect for researchers, developers, and creators who want to fine-tune or extend the model.

FLUX.2 introduces support for up to 10 reference images, giving you control over identity, product continuity, and creative direction. You can lock in a model, a product, a camera angle, a lighting setup (or any combination of these) and generate a cohesive series of images that stay consistent.

Use Cases

Product Photography

One of the biggest improvements from FLUX.1 to FLUX.2 is its dramatically improved product photoshoot capability. As seen in the Armani fragrance example below, FLUX.2 performs great lifestyle photography producing natural freckles and lifelike skin texture, while keeping the product perfectly consistent across angles and lighting.

"Make the woman hold the Armani Fragrannce"

Precise color control

Here, FLUX.2 demonstrates accurate color reproduction and fabric texture retention. The model understands HEX codes, allowing you to specify an exact value and generate any product in the precise color you need. This level of control is especially powerful for product photography, where accurate color representation directly impacts customer trust.

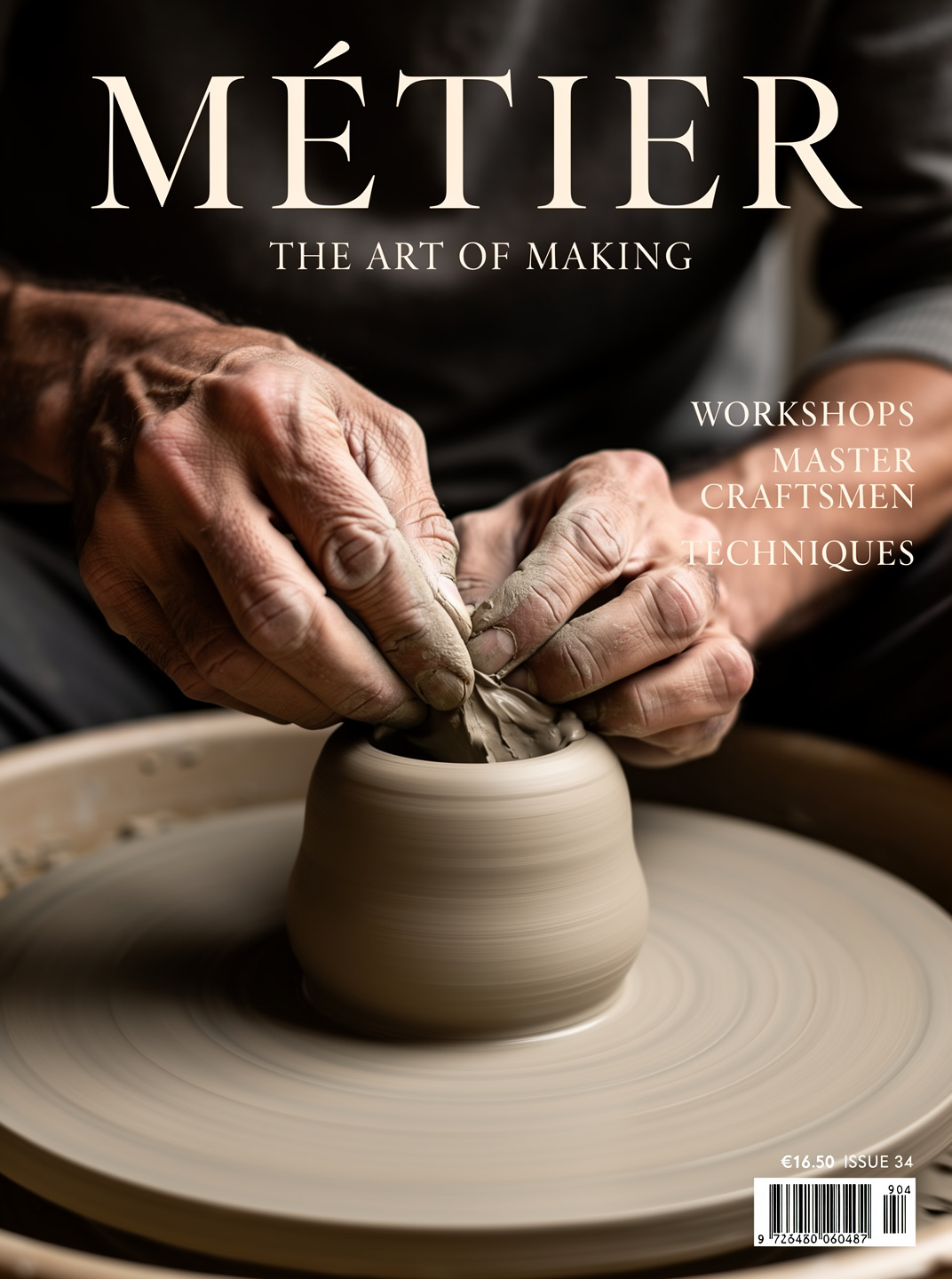

Editorial Shots

Editorial-style photography is one of FLUX.2’s strongest capabilities. The pottery magazine cover example below highlights its ability to produce hyper-realistic, cinematic images with rich depth of field and lifelike detail. FLUX.2 also handles text exceptionally well delivering great on text placement, even creating a realistic barcode.

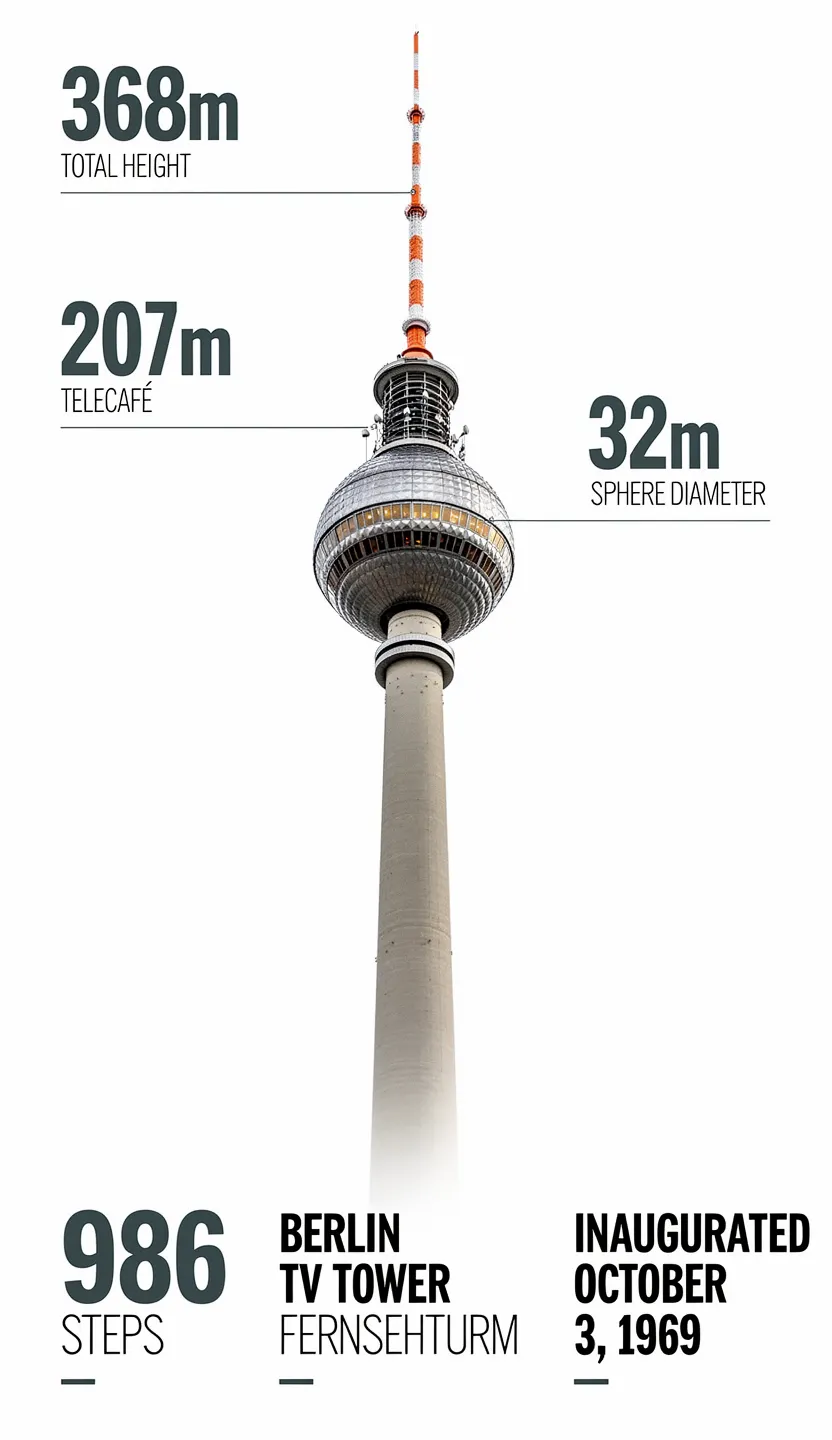

Infographics

FLUX.2 is significantly better at generating text, embedding it cleanly into complex compositions like diagrams, labels, and infographic overlays . The Berlin TV Tower example below highlights how well the model handles structured information: maintaining alignment, keeping the subject sharp, and generating text with consistent typography.

Stylized Illustrations

FLUX.2 handles stylized work just as well as its photorealistic shots. It can shift effortlessly between 2D anime, hand-drawn illustration, and bold comic-book aesthetics, giving you plenty of creative range no matter the project.

FLUX.2 Endpoints

Flex:

https://fal.ai/models/fal-ai/flux-2-flex/edit

https://fal.ai/models/fal-ai/flux-2-flex/

Pro

https://fal.ai/models/fal-ai/flux-2-pro/edit

https://fal.ai/models/fal-ai/flux-2-pro/

Dev

https://fal.ai/models/fal-ai/flux-2/edit

https://fal.ai/models/fal-ai/flux-2/lora/edit

https://fal.ai/models/fal-ai/flux-2/

https://fal.ai/models/fal-ai/flux-2/lora

Foundational Prompting Framework

We recommend structuring prompts around:

- Subject —the core entity (person, product, object, character)

- Action — what the subject is doing, how they’re posed

- Style — the artistic or photographic aesthetic

- Context — environment, lighting, time of day, mood, composition

It is helpful to order details from most important → least important. Prioritize core information (subject, action) before style or atmosphere. This framework above is designed to produce highly predictable, photorealistic outcomes and works really well with model.

JSON Structured Image Generation Prompts

FLUX.2 is trained to understand structured JSON prompts. While the model does not enforce a schema for your inputs, JSON prompting makes writing prompts for targeted output control significantly easier.

Below we provided a recommended base schema that we experienced to perform well on most tasks. But feel free to use your own.

Show JSON Schema

{

"type": "object",

"properties": {

"scene": {

"type": "string",

"description": "Overall scene setting or location"

},

"subjects": {

"type": "array",

"items": {

"type": "object",

"properties": {

"type": {

"type": "string",

"description": "Type of subject (e.g., desert nomad, blacksmith, DJ, falcon)"

},

"description": {

"type": "string",

"description": "Physical attributes, clothing, accessories"

},

"pose": {

"type": "string",

"description": "Action or stance"

},

"position": {

"type": "string",

"enum": ["foreground", "midground", "background"],

"description": "Depth placement in scene"

}

},

"required": ["type", "description", "pose", "position"]

}

},

"style": {

"type": "string",

"description": "Artistic rendering style (e.g., digital painting, photorealistic, pixel art, noir sci-fi, lifestyle photo, wabi-sabi photo)"

},

"color_palette": {

"type": "array",

"items": { "type": "string" },

"minItems": 3,

"maxItems": 3,

"description": "Exactly 3 main colors for the scene (e.g., ['navy', 'neon yellow', 'magenta'])"

},

"lighting": {

"type": "string",

"description": "Lighting condition and direction (e.g., fog-filtered sun, moonlight with star glints, dappled sunlight)"

},

"mood": {

"type": "string",

"description": "Emotional atmosphere (e.g., harsh and determined, playful and modern, peaceful and dreamy)"

},

"background": {

"type": "string",

"description": "Background environment details"

},

"composition": {

"type": "string",

"enum": [

"rule of thirds",

"circular arrangement",

"framed by foreground",

"minimalist negative space",

"S-curve",

"vanishing point center",

"dynamic off-center",

"leading leads",

"golden spiral",

"diagonal energy",

"strong verticals",

"triangular arrangement"

],

"description": "Compositional technique"

},

"camera": {

"type": "object",

"properties": {

"angle": {

"type": "string",

"enum": ["eye level", "low angle", "slightly low", "bird's-eye", "worm's-eye", "over-the-shoulder", "isometric"],

"description": "Camera perspective"

},

"distance": {

"type": "string",

"enum": ["close-up", "medium close-up", "medium shot", "medium wide", "wide shot", "extreme wide"],

"description": "Framing distance"

},

"focus": {

"type": "string",

"enum": ["deep focus", "macro focus", "selective focus", "sharp on subject", "soft background"],

"description": "Focus type"

},

"lens": {

"type": "string",

"enum": ["14mm", "24mm", "35mm", "50mm", "70mm", "85mm"],

"description": "Focal length (wide to telephoto)"

},

"f-number": {

"type": "string",

"description": "Aperture (e.g., f/2.8, the smaller the number the more blurry the background)"

},

"ISO": {

"type": "number",

"description": "Light sensitivity value (comfortable range between 100 & 6400, lower = less sensitivity)"

}

}

},

"effects": {

"type": "array",

"items": { "type": "string" },

"description": "Post-processing effects (e.g., 'lens flare small', 'subtle film grain', 'soft bloom', 'god rays', 'chromatic aberration mild')"

}

},

"required": ["scene", "subjects"]

}

Currently JSON prompts are primarily used for image generation. For image editing we still recommend to follow Image to Image edit prompt best practices.

FLUX.2 LoRA Training

FLUX.2 on fal offers two powerful pathways for model customization: Text-to-Image LoRA training, which teaches the model a new style, character, product, or aesthetic; and (Multi) Image-to-Image LoRA training, which teaches the model how to transform one image into another. Both trainers are easy to use and designed to help creators and developers produce highly specialized behaviors inside FLUX.2, whether that’s a new illustration style, a character, or a domain-specific transformation.

Read our guide on how to train a FLUX.2 LoRA on fal here.

LoRA Training Endpoints

- Text-to-Image: https://fal.ai/models/flux-2-trainer/text-to-image

- Image Editing: https://fal.ai/models/fal-ai/flux-2-trainer/edit

Stay tuned to our Youtube, Reddit, blog, Twitter, or Discord for the latest updates on generative media and the new model releases!