AuraSR V2

Today we released the second version of our single-step GAN upscaler: AuraSR.

We released AuraSR v1 last month and were encouraged by the community response, so we immediately started training a new version.

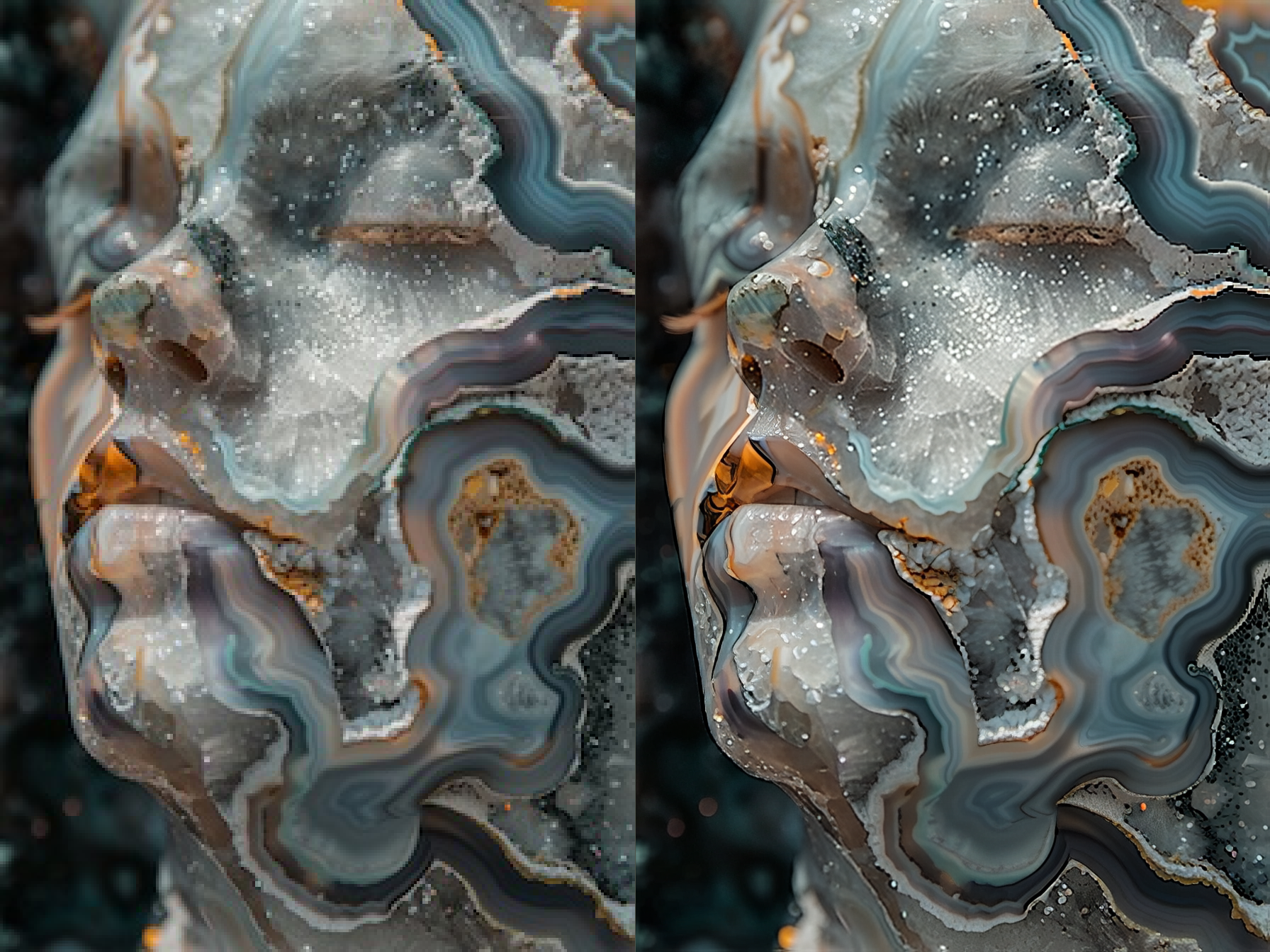

AuraSR is based on Adobe's GigaGAN paper and uses lucidrains' implementation as a starting point. The GigaGAN upscaler was designed specifically for generated images and lacked degradation pre-processing during training. As a result, AuraSR v1 was unable to upscale JPEG-compressed images without artifacts.

We saw that people in the community wanted to use AuraSR on non-generated images with many different types of degradation, so for v2 we included a degradation process similar to the one used in ESRGAN training.
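To make the idea of degradation pre-processing concrete, here is a minimal pure-Python sketch of how a clean high-resolution tile might be turned into a degraded low-resolution input. It is not the AuraSR training code: the function names, the parameter ranges, and the specific steps (box blur, area downsampling, Gaussian noise, and coarse quantization as a crude stand-in for JPEG compression) are all assumptions chosen for illustration.

```python
import random

def box_blur(img, radius):
    """Blur a 2D grayscale image (list of rows) with a (2r+1)x(2r+1) box filter."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            ys = range(max(0, y - radius), min(h, y + radius + 1))
            xs = range(max(0, x - radius), min(w, x + radius + 1))
            vals = [img[j][i] for j in ys for i in xs]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out

def downsample(img, factor):
    """Area-average downsample by an integer factor."""
    h, w = len(img) // factor, len(img[0]) // factor
    return [[sum(img[y * factor + j][x * factor + i]
                 for j in range(factor) for i in range(factor)) / factor ** 2
             for x in range(w)] for y in range(h)]

def add_noise(img, sigma, rng):
    """Add zero-mean Gaussian noise to every pixel."""
    return [[v + rng.gauss(0.0, sigma) for v in row] for row in img]

def quantize(img, step):
    """Crude stand-in for lossy compression (NOT real JPEG):
    snap values to multiples of `step` and clamp to [0, 255]."""
    return [[min(255.0, max(0.0, round(v / step) * step)) for v in row]
            for row in img]

def degrade(hr, rng, factor=4):
    """Hypothetical degradation chain: blur -> downsample -> noise -> quantize.
    All parameter ranges are illustrative assumptions."""
    lr = box_blur(hr, radius=rng.choice([0, 1, 2]))
    lr = downsample(lr, factor)
    lr = add_noise(lr, sigma=rng.uniform(0.0, 10.0), rng=rng)
    return quantize(lr, step=rng.choice([1, 4, 8]))

# Usage: a 16x16 gradient tile becomes a degraded 4x4 input.
hr_tile = [[float(16 * y + x) for x in range(16)] for y in range(16)]
lr_tile = degrade(hr_tile, random.Random(0))
```

Randomizing the strength of each step per sample is what exposes the model to a range of input qualities rather than only clean downscaled images.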

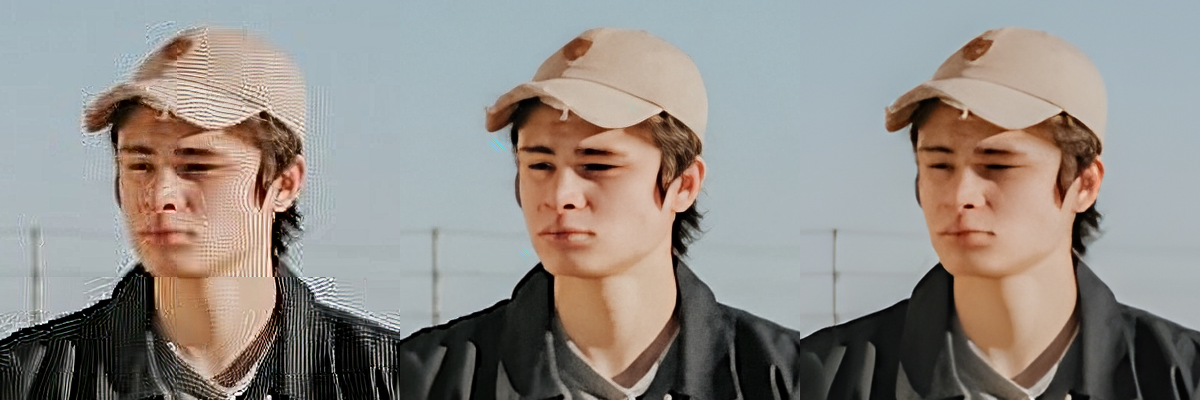

Additionally, we noticed that v1 tended to add too much detail. We attributed this issue to a mismatch between training and test data. When training v1, we would resize larger images to 256 pixels as ground truth and resize again to 64 pixels for the low-resolution input.

However, during inference v1 upscales 64-pixel tiles of a larger image. There is a noticeable difference between how much detail a small tile of an image contains and how much the whole image contains. So for v2 training we use 256-pixel tiles of 1024-pixel images. This brings training much closer to how the model is used during inference.
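The difference between the two schemes can be sketched in a few lines of Python. This is an illustration only: the function names are hypothetical, and the random crop position and the 4x downscale of the v2 tile to a 64-pixel input are assumptions that the post does not spell out.

```python
import random

def v1_pair(image_size):
    """v1 scheme: the whole image is resized to 256 px (target)
    and again to 64 px (low-resolution input)."""
    return {"crop": (0, 0, image_size, image_size),
            "target_size": 256, "input_size": 64}

def v2_pair(rng, image_size=1024, tile=256, scale=4):
    """v2 scheme: a 256 px tile of a 1024 px image is the target.
    Assumptions: the tile position is random and the input is the
    tile downscaled by `scale`."""
    x0 = rng.randrange(0, image_size - tile + 1)
    y0 = rng.randrange(0, image_size - tile + 1)
    return {"crop": (x0, y0, x0 + tile, y0 + tile),
            "target_size": tile, "input_size": tile // scale}

def source_pixels_per_input_pixel(pair):
    """How many source pixels (per side) one low-res input pixel summarizes."""
    x0, _, x1, _ = pair["crop"]
    return (x1 - x0) / pair["input_size"]

rng = random.Random(0)
old = v1_pair(1024)
new = v2_pair(rng)
print(source_pixels_per_input_pixel(old))  # whole 1024 px image squeezed into 64 px
print(source_pixels_per_input_pixel(new))  # 256 px tile squeezed into 64 px
```

Under these assumptions, a v1 input pixel summarizes far more of the source image than a v2 input pixel does, which is one way to see why v1 learned to hallucinate more detail than a 64-pixel inference tile actually calls for.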

We made one final improvement to address seams during inference. Seams occur because inference uses non-overlapping tiles. For some images the seams are not noticeable, but for many they are a big problem. We updated our inference library, aura-sr, to include a new inference method, `upscale_4x_overlapped`, which performs inference twice with overlapping tiles and averages the results to remove seams.
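The two-pass idea can be sketched in pure Python as follows. This is not the library's implementation of `upscale_4x_overlapped`: the helper names are hypothetical, the second pass is assumed to use a tile grid shifted by half a tile, the blend is a plain average (the real blending weights may differ), and a nearest-neighbour function stands in for the model.

```python
def nearest_4x(tile):
    """Toy stand-in for the model: nearest-neighbour 4x upscale of a 2D list."""
    return [[v for v in row for _ in range(4)] for row in tile for _ in range(4)]

def grid(size, tile, offset):
    """Tile boundaries covering [0, size), with the grid shifted by `offset`.
    Edge tiles are clipped to the image, so they may be smaller than `tile`."""
    cuts = sorted({0, size, *range(offset, size, tile)})
    return list(zip(cuts[:-1], cuts[1:]))

def one_pass(image, upscale_tile, tile, offset, scale):
    """Upscale every tile of one grid independently and paste the results."""
    h, w = len(image), len(image[0])
    out = [[0.0] * (w * scale) for _ in range(h * scale)]
    for y0, y1 in grid(h, tile, offset):
        for x0, x1 in grid(w, tile, offset):
            patch = [row[x0:x1] for row in image[y0:y1]]
            for dy, up_row in enumerate(upscale_tile(patch)):
                out[y0 * scale + dy][x0 * scale:x1 * scale] = up_row
    return out

def upscale_overlapped(image, upscale_tile, tile=64, scale=4):
    """Run two passes whose tile borders do not coincide, then average them,
    so every seam from one pass lies inside a tile of the other pass."""
    a = one_pass(image, upscale_tile, tile, 0, scale)
    b = one_pass(image, upscale_tile, tile, tile // 2, scale)
    return [[(p + q) / 2 for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]

# Usage with the toy upscaler on an 8x8 image and 4-pixel tiles.
image = [[y * 8 + x for x in range(8)] for y in range(8)]
result = upscale_overlapped(image, nearest_4x, tile=4)
```

Because the second grid is offset, a discontinuity at a tile border in one pass is blended with continuous content from the other pass, at the cost of roughly twice the inference time.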

AuraSR v2 uses the same architecture as v1, so it should be a drop-in replacement. The model is available on Hugging Face and has been deployed to fal's AuraSR endpoint.

We're looking forward to training v3. We plan to use higher-resolution images, more face images, and a brand-new architecture. Until then, enjoy AuraSR v2!